One of the most confusing with Oracle replication tools is the inability to track changes of tables owned by SYS, SYSTEM or CTXSYS.

Many system tables are internally using cluster type. Since the latest version OpenLogReplicator supports cluster tables it is now possible track also tables in SYS schema and send changes as events to Kafka.

Now it is possible to use:

"tables": [

{"table": "SYS.%"}

]Let’s check if there are any technical difficulties to process with such replication. Let’s check some scenarios.

1. User logs in to the database

This produces the following JSON message to Kafka:

{"scn":115510975,"timestamp":1593145820000,"xid":"9.18.154699",dml:[{"operation":"update","table":"SYS.USER$","rowid":"AAAAAKAABAAAADRAAK","before":{"SPARE6":"2020-06-26T04:25:55"},"after":{"SPARE6":"2020-06-26T04:30:20"}}]}As seen – when the user SYSTEM logs in some SPARE6 column (which holds last login date is updated in SYS.USER$. Since SYS.USER$ does not contain supplemental log group for primary key – you can locate the user only by rowid.

2. User creates a table

Let’s create a very simple table like:

SQL> create table usr1.tab(col1 int, col2 varchar2(20));This creates the following JSON message to Kafka:

{"scn":115513807,"timestamp":1593147364000,"xid":"9.8.154640",dml:[

{"operation":"insert","table":"SYS.OBJ$","rowid":"AAAAASAABAAANuJAAt","after":{"OBJ#":33517,"DATAOBJ#":33517,"OWNER#":89,"NAME":"TAB","NAMESPACE":1,"TYPE#":2,"CTIME":"2020-06-26T06:56:04","MTIME":"2020-06-26T06:56:04","STIME":"2020-06-26T06:56:04","STATUS":1,"FLAGS":0,"SPARE1":6,"SPARE2":1,"SPARE3":89,"SPARE7":0,"SPARE8":0,"SPARE9":0,"DFLCOLLID":16382}},

{scn":115513807,"operation":"insert","table":"SYS.DEFERRED_STG$","rowid":"AAAABRAABAAAAP7AA3","after":{"OBJ#":33517,"FLAGS_STG":8,"CMPFLAG_STG":0,"CMPLVL_STG":0,"FLAGS2_STG":0}},

{"scn":115513807,"operation":"insert","table":"SYS.TAB$","rowid":"AAAAACAABAAAOJSAAF","after":{"OBJ#":33517,"DATAOBJ#":33517,"TS#":4,"FILE#":0,"BLOCK#":0,"COLS":2,"PCTFREE$":10,"PCTUSED$":40,"INITRANS":1,"MAXTRANS":255,"FLAGS":1073741825,"AUDIT$":"--------------------------------------","INTCOLS":2,"KERNELCOLS":2,"PROPERTY":17716740096,"TRIGFLAG":0,"SPARE1":736,"SPARE6":"2020-06-26T04:56:04","ACDRFLAGS":0,"ACDRTSOBJ#":0,"ACDRROWTSINTCOL#":0}},

{"scn":115513807,"operation":"insert","table":"SYS.COL$","rowid":"AAAAACAABAAAOJSAAr","after":{"OBJ#":33517,"COL#":1,"SEGCOL#":1,"SEGCOLLENGTH":22,"OFFSET":0,"NAME":"COL1","TYPE#":2,"LENGTH":22,"FIXEDSTORAGE":0,"SCALE":0,"NULL$":0,"INTCOL#":1,"PROPERTY":0,"CHARSETID":0,"CHARSETFORM":0,"EVALEDITION#":0,"SPARE1":0,"SPARE2":0,"SPARE3":0,"SPARE9":0,"SPARE10":0}},

{"scn":115513807,"operation":"insert","table":"SYS.COL$","rowid":"AAAAACAABAAAOJSAAs","after":{"OBJ#":33517,"COL#":2,"SEGCOL#":2,"SEGCOLLENGTH":20,"OFFSET":0,"NAME":"COL2","TYPE#":1,"LENGTH":20,"FIXEDSTORAGE":0,"NULL$":0,"INTCOL#":2,"PROPERTY":0,"CHARSETID":873,"CHARSETFORM":1,"EVALEDITION#":0,"SPARE1":0,"SPARE2":0,"SPARE3":20,"COLLID":16382,"SPARE9":0,"SPARE10":0}},

{"scn":115513807,"operation":"update","table":"SYS.OBJ$","rowid":"AAAAASAABAAANuJAAt","before":{"SIGNATURE":null},"after":{"SIGNATURE":"4c46c72998cc773580d1b457b5a12d42"}}]}Please mind that this is all – one single transaction. I have visually divided the DML operations, but they occur in one single transaction.

The create table produces:

- one INSERT to SYS.OBJ$

- one INSERT to SYS.DEFERRED_STG$

- one INSERT to SYS.TAB$

- few INSERTS to SYS.COL$ – one for every column

- one UPDATE to SYS.OBJ$ – updating the SIGNATURE column

3. Inserting data into table

Let’s just insert a row into the table:

SQL> insert into usr1.tab values(1,'2');

SQL> commit;Since this is a first insert – this also produces changes in SYS schema tables:

{"scn":115514454,"timestamp":1593147768000,"xid":"8.3.155753",dml:[

{"operation":"delete","table":"SYS.SEG$","rowid":"AAAAAIAABAAAMQJAAZ","before":{"TS#":0,"FILE#":1,"BLOCK#":56440}}]}

{"scn":115514458,"timestamp":1593147768000,"xid":"8.2.155756",dml:[

{"operation":"insert","table":"SYS.SEG$","rowid":"AAAAAIAABAAAMQJAAd","after":{"TS#":4,"FILE#":4,"BLOCK#":26090,"TYPE#":3,"BLOCKS":8,"EXTENTS":1,"INIEXTS":8,"MINEXTS":1,"MAXEXTS":2147483645,"EXTSIZE":128,"EXTPCT":0,"USER#":89,"LISTS":0,"GROUPS":0,"BITMAPRANGES":2147483645,"CACHEHINT":0,"SCANHINT":0,"HWMINCR":33517,"SPARE1":4325761}}]}

{"scn":115514460,"timestamp":1593147768000,"xid":"8.29.155695",dml:[

{"operation":"delete","table":"SYS.DEFERRED_STG$","rowid":"AAAABRAABAAAAP7AA3","before":{"OBJ#":33517,"FLAGS_STG":8,"CMPFLAG_STG":0,"CMPLVL_STG":0,"FLAGS2_STG":0}},

{"operation":"update","table":"SYS.TAB$","rowid":"AAAAACAABAAAOJSAAF","before":{"FILE#":0,"BLOCK#":0,"PROPERTY":17716740096,"ACDRFLAGS":0,"ACDRTSOBJ#":0},"after":{"FILE#":4,"BLOCK#":26090,"PROPERTY":536870912,"ACDRFLAGS":null,"ACDRTSOBJ#":null}},

{"operation":"update","table":"SYS.SEG$","rowid":"AAAAAIAABAAAMQJAAd","before":{"TYPE#":3,"SPARE1":4325761},"after":{"TYPE#":5,"SPARE1":4325633}}]}Once the first row is inserted – segment allocation occurs and this is also reflected in SYS.SEG$, SYS.TAB$, SYS.DEFERRED_STG$, without going into details.

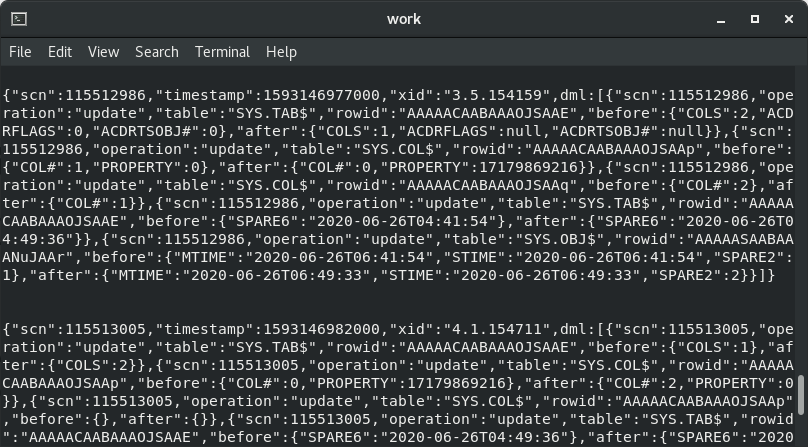

4. Adding column to a table

Let’s just stick to simple column with null:

SQL> alter table usr1.tab add col3 timestamp null;This produces the following JSON message in Kafka:

{"scn":115515348,"timestamp":1593148263000,"xid":"6.16.154777",dml:[

{"operation":"update","table":"SYS.TAB$","rowid":"AAAAACAABAAAOJSAAF","before":{"COLS":2,"INTCOLS":2,"KERNELCOLS":2},"after":{"COLS":3,"INTCOLS":3,"KERNELCOLS":3}},

{"operation":"insert","table":"SYS.COL$","rowid":"AAAAACAABAAAOJSAAt","after":{"OBJ#":33517,"COL#":3,"SEGCOL#":3,"SEGCOLLENGTH":11,"OFFSET":0,"NAME":"COL3","TYPE#":180,"LENGTH":11,"FIXEDSTORAGE":0,"SCALE":6,"NULL$":0,"INTCOL#":3,"PROPERTY":0,"CHARSETID":0,"CHARSETFORM":0,"EVALEDITION#":0,"SPARE1":6,"SPARE2":0,"SPARE3":0,"SPARE9":0,"SPARE10":0}},

{"operation":"update","table":"SYS.OBJ$","rowid":"AAAAASAABAAANuJAAt","before":{"MTIME":"2020-06-26T06:56:04","STIME":"2020-06-26T06:56:04","SPARE2":1,"SIGNATURE":"4c46c72998cc773580d1b457b5a12d42"},"after":{"MTIME":"2020-06-26T07:11:01","STIME":"2020-06-26T07:11:01","SPARE2":2,"SIGNATURE":"9301ecc251c8ad6748d5c829e9b73da3"}}]}All changes are done in one transaction, which includes:

- one UPDATE of SYS.TAB$ row with information about number of total columns

- one INSERT to SYS.COL$ with the new column definition

- one UPDATE to SYS.OBJ$ with the information that SIGNATURE column changed, and MTIME and STIME got updated with current timestamp

5. Dropping a table

The final task is a drop (without purge) operation.

SQL> drop table usr1.tab;This produced the following JSON message ti Kafka stream:

{"scn":115515760,"timestamp":1593152113000,"xid":"4.31.154713",dml:[

{"operation":"insert","table":"SYS.RECYCLEBIN$","rowid":"AAAAEGAABAAAAkRAAY","after":{"OBJ#":33517,"OWNER#":89,"ORIGINAL_NAME":"TAB","OPERATION":0,"TYPE#":1,"TS#":4,"FILE#":4,"BLOCK#":26090,"DROPTIME":"2020-06-26T07:15:13","DROPSCN":115515746,"FLAGS":30,"RELATED":33517,"BO":33517,"PURGEOBJ":33517,"SPACE":8,"CON#":0}},

{"operation":"delete","table":"SYS.OBJ$","rowid":"AAAAASAABAAANuJAAt","before":{"OBJ#":33517,"DATAOBJ#":33517,"OWNER#":89,"NAME":"TAB","NAMESPACE":1,"TYPE#":2,"CTIME":"2020-06-26T06:56:04","MTIME":"2020-06-26T07:11:01","STIME":"2020-06-26T07:11:01","STATUS":1,"FLAGS":0,"SPARE1":6,"SPARE2":2,"SPARE3":89,"SIGNATURE":"9301ecc251c8ad6748d5c829e9b73da3","SPARE7":0,"SPARE8":0,"SPARE9":0,"DFLCOLLID":16382}},

{"operation":"insert","table":"SYS.OBJ$","rowid":"AAAAASAABAAANuJAAr","after":{"OBJ#":33517,"DATAOBJ#":33517,"OWNER#":89,"NAME":"BIN$qPaFWPS9YE\/gUxIKqMDR9g==$0","NAMESPACE":1,"TYPE#":2,"CTIME":"2020-06-26T06:56:04","MTIME":"2020-06-26T07:15:13","STIME":"2020-06-26T07:15:13","STATUS":1,"FLAGS":128,"SPARE1":6,"SPARE2":3,"SPARE3":89,"SPARE7":0,"SPARE8":0,"SPARE9":0,"DFLCOLLID":16382}}]}

{"scn":115515764,"timestamp":1593148513000,"xid":"5.29.154536",dml:[

{"operation":"update","table":"SYS.SEG$","rowid":"AAAAAIAABAAAMQJAAd","before":{"SPARE1":4325633},"after":{"SPARE1":4391169}}]}There were 2 transactions generated. The first one is:

- one INSERT operation to SYS.RECYCLEBIN$ table

- one DELETE operation from SYS.OBJ$

- one INSERT operation to SYS.OBJ$

Columns table is not touched. As you can clearly see I have recycle bin active in my database.

I could make tests over and over checking various operations, but this is not the goal here. The goal was to check if SYS schema objects could be tracked with OpenLogReplicator.

This concludes the tests. If you have some ideas where SYS, SYSTEM tables tracking can be used – please feel free to write comments and share this post in social media.